PSC2

ABOUT THE PROJECT

Psc2 combines the worlds of jazz, rock, classical music, and artificial intelligence via original compositions and arrangements.

The compositions are built around the interplay of jazz harmonies, complex rhythms and extensive improvisation; many of them are composed in the “long-form” compositional style of classical music.

The arrangements take well-known songs from pop, rock and jazz and rebuild them in a manner similar to our original compositions. The arrangements include songs by Michael Jackson, Guns N’ Roses, Silvio Rodríguez, Duke Ellington and others.

Psc is a senior software developer in research at Google Brain and is experimenting with using machine learning models (including generative models for music) as part of the live performance. Most of the code used will be available in this repo. Psc2 used to be psctrio, with 3 human intelligences.

Creators: Pablo Samuel Castro, Claudio Palomares

Location: Montreal

Year: 2018

Tags: Artificial intelligence, Performance

NOISY SKELETON

Location: Lyon (France)

Year: 2014

Collaborators: David-Alexandre CHANEL, David GUERRA, Jonathan RICHER

Tags: Art installation

Noisy Skeleton is an interactive immersive installation exploring the links between sound, space and artificial intelligence. From complete control to accidental reaction, the spectator is completely surrounded by abstract visuals and a digital soundscape echoed back by the machine. Establishing a real man/machine dialogue, the minimalistic aesthetic and vibrations create an experience that is both virtual and physical, allowing the user to feel the most subtle variations of sound and space.

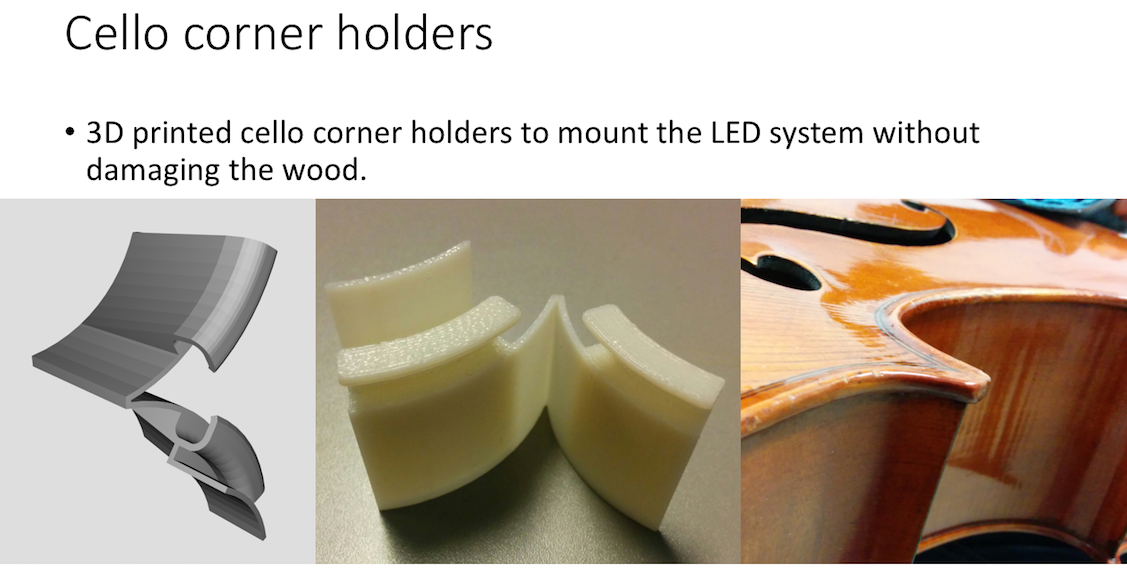

CELLO LIGHT

Visual observation of performance gestures has been shown to play a key role in audience reception of musical works. As author Luke Windsor points out, “the gestures that accompany music are potentially a primary manner in which an audience has direct contact with the performer.” This project, “Multimodal Visual Cello Augmentation,” proposes to augment audience perception through the creation of an interactive lighting system responsive to performance gestures that transforms gestural information into a real-time visual display. Our goals are: A) to control the interactive lighting (visual display) by mapping the gestural and biophysical information collected; B) to use the interactive lighting to augment interpretation of the musical text; C) to make performance practice decisions in parallel to designing the lighting/gestural interface; D) to identify and display meaningful information within performance gestures that are not apparent to the listener; and E) to promote the use of new technologies in the performing arts.

Artists & Collaborators: Juan Sebastian Delgado, Alex Nieva

Location: Montreal (Canada)

Year: 2016

Tags: AV performance